How to Shape Your Product Roadmap in 60 Seconds

As we have shown, customer feedback will steer us towards specific features (like smartphone cameras) that drive product ratings up or down. Today we’re going to focus on a question often heard in product development cycles - or any data project, really:

“So what do I do with all of this information?”

This time we’re going to explore the most important customer insights using a completely different set of products: automated cat litter boxes! Google product review data provides the material for this analysis, but our platform normally digests data from platforms like Zendesk, Salesforce, Kustomer, Gladly, and more.

Regardless of whether it’s survey response data, CRM integration data, website (or other) reviews, or call recordings, we process these customer conversations in the same way, cleanly separating products and features so that you can see what matters most to your customers.

The SupporTrends platform is powerful and adaptable enough to offer automatic recommendations across a wide variety of products. In this analysis, we’ll be comparing three products to the current best in the industry, the Litter-Robot 3 Connect:

Littermaid Cat Self-Cleaning Litter Box

Omega Paw Self-Cleaning Litter Box

Petsafe Simply Clean Litter Box

How we did it

As before, we processed the top 500 Google product reviews for each model listed above (which meant that automatic cat litter boxes that did not have at least 500 reviews could not be included). Google sorts these reviews in various magical ways, so while we have, presumably, the most relevant reviews for each product, we did not process all reviews for each product.

Let’s dive in!

First Glance

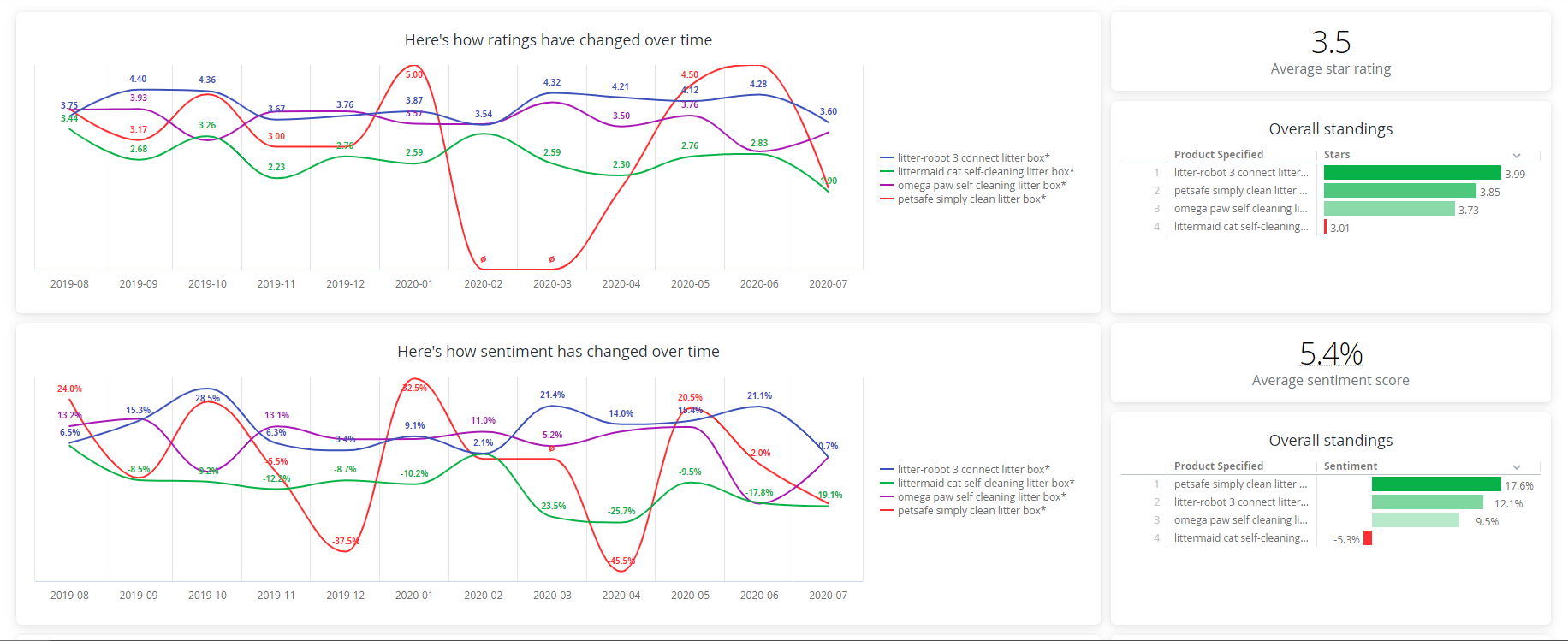

The Litter-Robot 3 Connect has the highest overall star rating in this data set at about 4 stars, compared with the average of 3.6 stars. The Petsafe Simply Clean Litter Box and the Omega Paw Self-Cleaning Litter Box are not too far behind in the high 3-star range. It’s also quite easy to see that one of the products receives substantially lower star ratings than its competition: the Littermaid Cat Self-Cleaning Litter Box.

Our sentiment analysis - which analyzes the language used by customers to describe their product experience - shows similar results:

We can also see that the star rating distribution for one of the products is quite different from the rest. 31% of the Littermaid’s product reviews are 1-star reviews, significantly above the others, which are around 8-10%. It’s not uncommon for 1-star and 5-star review quantities to be higher than 2s, 3s, or 4s, but it’s not usually this extreme. So what’s the story?
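For readers who want to reproduce this kind of breakdown on their own review export, here is a minimal sketch of a star rating distribution. The function name and the sample ratings are hypothetical and for illustration only; this is not the SupporTrends implementation, and the numbers are not the data from this analysis.

```python
from collections import Counter

def star_distribution(ratings):
    """Return the share of reviews at each star level (1-5) as fractions."""
    total = len(ratings)
    if total == 0:
        # No reviews: report a zero share for every star level.
        return {star: 0.0 for star in range(1, 6)}
    counts = Counter(ratings)
    return {star: counts.get(star, 0) / total for star in range(1, 6)}

# Hypothetical ratings, made up for this example.
sample_ratings = [1, 1, 1, 5, 5, 4, 3, 5, 2, 5]
distribution = star_distribution(sample_ratings)

for star, share in distribution.items():
    print(f"{star}-star: {share:.0%}")
```

Comparing the 1-star share across products is then a matter of running the same function on each product's ratings.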

We can select the Littermaid Cat Self-Cleaning Litter Box product and begin to isolate what’s going on. With just a single click, SupporTrends’ natural language AI tells us what we need to focus on first by automatically weighing the most important factors in customer satisfaction:

When we look at the top four results from our “What should we fix?” index (which evaluates the relationship between positive and negative feedback for a given product or feature), “litter”, “waste”, “rake”, and “mess” stand out due to their high volume and very negative estimated NPS impact:

Wait, SupporTrends estimates NPS? Yes, we do, and not only for a given brand or product. Our newest algorithms estimate the impact that specific features and categories have on NPS so our customers know what to fix first.

We’ll dive into the inner workings of these algorithms in a later post, but for now, let’s filter into those top negative influencers:

Many of the comments indicate that the rake, litter, waste, and mess do often go hand-in-hand. We can see some of the key comments are “stuff sticks to the rake…and makes a mess”, “nothing gets picked up by the rake”, “jams up the rake”, and “litter gets stuck in the rake”. You get the idea.

We also find that “waste” in this context actually has little to do with what the rake picks up (as we assumed it would!) and everything to do with the product being a “waste of money” as a result of the poor rake performance. In fact, 77% of the total “waste” comments were related to issues with the rake. This dual meaning of the term “waste” highlights the importance of our platform’s approach to surfacing what’s important and delivering the relevant context to go with it.
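As a rough illustration of how an overlap figure like this could be checked by hand, the sketch below counts how many comments mentioning one term also mention another. It assumes simple whole-word keyword matching, which is an assumption made for this example and not a description of how our platform relates comments to features. The function names and sample comments are hypothetical.

```python
import re

def mentions(text, term):
    """True if `term` appears as a whole word (case-insensitive) in `text`."""
    return re.search(rf"\b{re.escape(term)}\b", text, re.IGNORECASE) is not None

def co_mention_share(comments, term, other):
    """Share of comments mentioning `term` that also mention `other`."""
    with_term = [c for c in comments if mentions(c, term)]
    if not with_term:
        return 0.0
    both = [c for c in with_term if mentions(c, other)]
    return len(both) / len(with_term)

# Hypothetical comments, made up for this example.
sample_comments = [
    "Waste of money, the rake jams constantly.",
    "The rake leaves waste behind.",
    "Total waste of money.",
    "Works fine for my cat.",
]
share = co_mention_share(sample_comments, "waste", "rake")
print(f"{share:.0%} of 'waste' comments also mention the rake")
```

Whole-word matching is deliberately strict here: it would miss plurals such as "rakes", so a real check would need stemming or a list of variants.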

So we can see this group isn’t happy, and its filtered satisfaction metrics back that up:

Reading through this focused pool of comments is fast and easy now that our platform has isolated them from the noise. We can even see customers telling us what we need to do to fix the problem. Comments such as “rake is made of plastic and it is unable to lift”, “rakes for scooping the litter up are too short”, and “the rake…does not reach to the bottom of the pan” can direct a product team to get straight to work on solving the right problems.

“the rakes for scooping the litter up are too short”

So the course is becoming clear just a few clicks into these reviews. But one question remains: what would happen if we fixed the problem (via a more powerful motor, stronger plastic, or any other of the many suggestions)?

You don’t have to wonder since we can quickly remove those reviews with a few clicks! Here is a view of all of the reviews processed, as a refresher:

What follows is the same data set presented as if we “solved” the issue with the litter and rake. The Littermaid Cat Self-Cleaning Litter Box is finally in the green with a positive sentiment score of 7.0%, only slightly behind the Omega Paw Self-Cleaning Litter Box, and much closer to being in line with the other competing products. Additionally, you can see that the share of 1-star reviews has dropped from 31% to 24%.

The work is not done and solving this particular problem is not going to turn the Littermaid Cat Self-Cleaning Litter Box into a top-ranked Litter-Robot 3 Connect, but it’s a good place for the product team to spend some energy.

Below you can see both customer satisfaction indexes, star rating and sentiment, in detail. Solving the rake problem improved the Littermaid Cat Self-Cleaning Litter Box quite noticeably:

Star Rating

From 3.01 stars to 3.35

Sentiment score

From -5.3% to +7.0%

It’s important to note that “solving” the rake issue removes all of the reviews that cite the rake and litter (since we don’t know if that reviewer would have reviewed negatively again, reviewed positively, or not written a review at all). About 18% of those reviews were actually overall positive, so a real-life improvement in this area could yield even better results.
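The removal approach described above can be sketched in a few lines: drop every review that cites the problem terms, then recompute the average star rating on what remains. This is a minimal sketch with hypothetical function names and made-up reviews, and it uses plain substring matching as a simplifying assumption; it is not our platform's method and does not reproduce the 3.01 to 3.35 result.

```python
def average_rating(reviews):
    """Mean star rating, or None when there are no reviews."""
    if not reviews:
        return None
    return sum(r["stars"] for r in reviews) / len(reviews)

def without_terms(reviews, terms):
    """Keep only reviews whose text contains none of `terms` (case-insensitive)."""
    lowered = [t.lower() for t in terms]
    return [
        r for r in reviews
        if not any(t in r["text"].lower() for t in lowered)
    ]

# Hypothetical reviews, made up for this example.
sample_reviews = [
    {"stars": 1, "text": "The rake jams and makes a mess."},
    {"stars": 2, "text": "Litter gets stuck in the rake."},
    {"stars": 5, "text": "Quiet and easy to set up."},
    {"stars": 4, "text": "The rake works fine for us."},
    {"stars": 3, "text": "Bigger than expected."},
]

before = average_rating(sample_reviews)
remaining = without_terms(sample_reviews, ["rake", "litter"])
after = average_rating(remaining)

print(f"Average before: {before:.2f}, after removing rake/litter reviews: {after:.2f}")
```

Note that the 4-star review mentioning the rake is removed along with the negative ones, which mirrors the caveat above about positive reviews being dropped too. Substring matching can also over-match (for example, "rake" inside "brake"), so treat the filter as a rough cut.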

Clearly this is not the end of the analysis, but if we can zero in on a single important feature that customers want fixed, and deliver an estimated measure of the incremental improvement that the fix would have on overall customer experience in less than 60 seconds, then what can you learn about your products with a little more time?

Try it out for yourself, for free, here.